The greatest terror of every agilist is the roadmap that just doesn’t move. Be it a product, a project, a service, or an initiative: the team is working, days pass, tasks move to “done”, and nothing of value is delivered. #thehorror

We’ve encountered some pretty intense scenarios, with dozens of teams working on the same product and, sprint after sprint, no feature getting finished: nothing a user could use, nothing that could be launched.

Productivity varied greatly among teams, but even for the most productive ones, “a working product in the client’s hands” was still a distant dream.

To deal with situations like this, and so many others where we cannot afford to sit still, we combine three exercises designed to help your team gain traction quickly.

1 – Decantation Tank

We’ve talked at length before about the Tank, created by Danilo Risada. In this scenario, starting with the Tank is crucial, since most teams have a hard time making their strategy tangible and connecting it to a tactical plan. Many teams find themselves working on tasks “because someone told them to”, a stance that is extremely harmful to the organization’s pursuit of results.

To see the Tank step by step, go here.

By the end of this exercise: The team will be clear on its purpose, on the business problems (with a client focus) it has to solve, on the success metrics that show those problems are solved, and on the ideas that will probably make up its backlog.

2 – Hypotheses Matrix

To avoid this scenario, and before anyone fills a risk spreadsheet with a deluge of guesswork, we’ve created a tool to address the issue. It’s based on Marty Cagan’s work on product management, on Steve Blank’s The Four Steps to the Epiphany, on Eric Ries’ The Lean Startup, and on Strategyzer’s Test Card for hypothesis validation.

To run the Hypotheses Matrix you’ll need:

a. 1 large sheet of paper (A2 or flipchart)

b. post-its

c. sharpies or similar

d. your team + stakeholders

e. a completed Decantation Tank

Before we begin: This matrix is not a test your team must pass with an A+. Some teams run this exercise and start answering the questions in the matrix with a “Yes” before even knowing what the point of it is. This signals a cultural dysfunction: an environment that is “safe from errors”, where people are not safe to make mistakes. What we should strive for is an environment that is safe to err, where people can fail fast, learn fast, and then succeed faster.

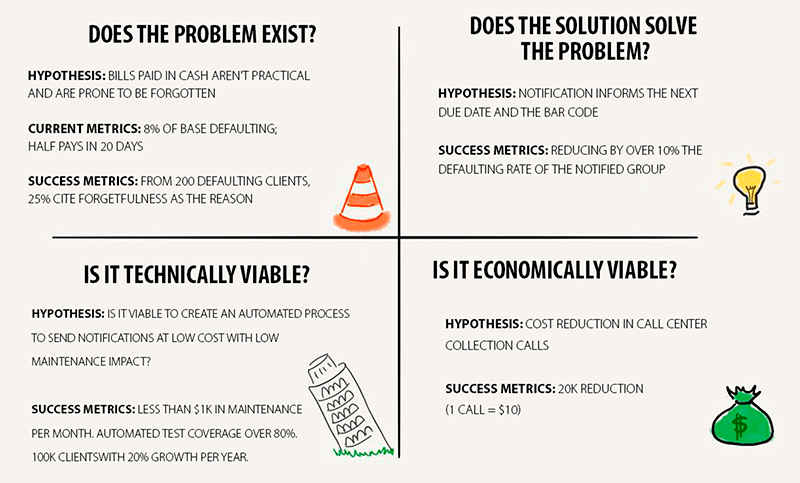

Does the problem exist?

Ask the team to choose an idea they prioritized in the Tank and draw a cross dividing the sheet of paper into four quadrants. In the first, ask them to write “Does the problem exist?”

Instruct the team to identify the problem connected to that idea and debate which metrics allow us to state that the problem exists. Remind the team that without metrics there’s no certainty, only a supposition. If such metrics are missing, identify the actions needed to obtain indicators that prove the problem exists.

The team must write down the problem and 1 to 3 metrics that demonstrate (or would demonstrate) that it’s a real issue and that it hurts badly enough to require a solution.

You can run 3-minute cycles using 1-2-all (a shortened take on the 1-2-4-All structure from Liberating Structures):

cycle 1: Each person comes up with a metric (preferably from the client’s standpoint) that demonstrates the problem exists.

cycle 2: In pairs, people discuss and iterate on the metrics.

cycle 3: The group brings together all the suggestions and chooses the final metrics.

Example:

Does the problem exist?

Issue hypothesis: Bills paid in cash aren’t practical and are easy to forget.

How can we know? From a client base of 200k, 8% default on the due date. Of these 16k clients, half still pay within 20 days after the due date.

We’ll know cash is the problem if at least 25% of a 200-client sample mention forgetting the cash, the due date, or any other aspect of the payment process as a reason for defaulting.
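If the team wants to double-check these numbers before writing them on the sheet, the arithmetic fits in a few lines. This is a minimal Python sketch, not part of the method itself: the helper names (`share_of`, `min_mentions`, `problem_confirmed`) are hypothetical, and it uses whole-number percentages so the 25% threshold is compared exactly.

```python
# Hypothetical helpers for the "Does the problem exist?" example.
# All figures come from the example above; rates are whole percentages.

def share_of(total: int, percent: int) -> int:
    """Integer share of `total`, e.g. share_of(200_000, 8) -> 16_000."""
    return total * percent // 100


def min_mentions(sample_size: int, threshold_percent: int) -> int:
    """Smallest number of interviewees that reaches the threshold, rounded up."""
    return -(-(sample_size * threshold_percent) // 100)  # ceiling division


def problem_confirmed(mentions: int, sample_size: int, threshold_percent: int = 25) -> bool:
    """True if enough of the sample cite the payment process as the reason."""
    return mentions >= min_mentions(sample_size, threshold_percent)


client_base = 200_000
defaulters = share_of(client_base, 8)    # clients who miss the due date
late_payers = share_of(defaulters, 50)   # defaulters who still pay within 20 days
needed = min_mentions(200, 25)           # interviewees needed out of 200

print(defaulters, late_payers, needed)                   # 16000 8000 50
print(problem_confirmed(mentions=50, sample_size=200))   # True
print(problem_confirmed(mentions=49, sample_size=200))   # False
```

The point is the habit rather than the code: the threshold becomes a concrete count (50 of 200 interviews) that nobody can argue about after the fact.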

ATTENTION: “X%” or “N clients” is not a metric; it demonstrates nothing. If the team comes up with something like this, press them for a number, even if they’re not completely certain. Have them get the correct number from other areas of the company and validate what they believe to be true. This will give them knowledge they didn’t have about the product or service they’re responsible for.

Does the solution solve the problem?

Ask the team to write down the solution they came up with in the Tank. Then use the same 1-2-all process to find metrics that demonstrate the solution solves the problem. The problem metric may be enough for that, but encourage them to think in shorter cycles.

Example:

Does the solution solve the problem?

Solution hypothesis: Issue notifications with the next due date and the bar code.

How can we know? We’ll send some form of notification (SMS, for example) to clients who default often, reminding them of due dates and bar codes. We’ll be right if defaulting drops by at least 10% among the selected clients.
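“Drops by at least 10%” can be read two ways, so it’s worth agreeing on one before the test runs. The sketch below assumes a relative drop in the number of defaults among the same selected clients over comparable periods; that reading, the function name `solution_validated`, and the counts in the usage lines are all hypothetical and only illustrate the check.

```python
def solution_validated(defaults_before: int, defaults_during_test: int,
                       min_drop_percent: int = 10) -> bool:
    """Assumes "drops by at least 10%" means a relative drop in the number of
    defaults among the same selected clients, over comparable periods."""
    if defaults_before <= 0:
        raise ValueError("need a positive baseline to measure a drop")
    drop = defaults_before - defaults_during_test
    # Integer comparison avoids floating-point surprises at exactly 10%.
    return drop * 100 >= min_drop_percent * defaults_before


# Hypothetical counts, for illustration only.
print(solution_validated(120, 100))  # True  (about a 16.7% drop)
print(solution_validated(120, 110))  # False (about an 8.3% drop)
print(solution_validated(120, 108))  # True  (exactly 10%)
```

If the team instead means percentage points (for example, from a 30% to a 20% default rate), the comparison changes, which is exactly why it should be written down in the quadrant.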

ATTENTION: In this scenario, teams tend to think of a final solution, like notifications through an app. But we don’t even know whether receiving the reminder and the bar code will solve the problem, so we need to test that first. Then we can send SMS messages with a link to the bar code to see whether the client has internet access (receiving the SMS itself doesn’t require it). If a client, for whatever reason, doesn’t have internet access, it makes no sense to proceed with the app solution.

Is it economically viable?

The exact same process then takes place for engagement and economic viability. We have to know the financial return that will justify our efforts; otherwise, the effort may be largely wasted. Most teams have no idea what this return is, and that lack of knowledge usually leads them to overlook wasteful decisions.

Example:

Is it economically viable?

Hypothesis: Today, each collection call in these cases costs $10. If 25% of the clients who pay within 20 days no longer need to be called, that means 2k fewer calls a month: $20k in monthly savings, or $240k a year.

We’ll consider this a success if the cost/effort to maintain this solution is less than $240k a year.
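The savings estimate chains several numbers together, so a small script makes each step visible. This minimal Python sketch assumes the 8k clients who pay within 20 days (half of the 16k defaulters from the first quadrant) is a monthly figure, which the “2k fewer calls a month” implies; the constant names and `economically_viable` are hypothetical.

```python
CALL_COST_USD = 10
LATE_PAYERS_PER_MONTH = 8_000      # assumption: half of 16k defaulters, per month
SHARE_NO_LONGER_CALLED_PCT = 25

# Each step of the hypothesis, in whole numbers.
calls_avoided_per_month = LATE_PAYERS_PER_MONTH * SHARE_NO_LONGER_CALLED_PCT // 100
monthly_savings_usd = calls_avoided_per_month * CALL_COST_USD
yearly_savings_usd = monthly_savings_usd * 12


def economically_viable(yearly_cost_usd: int) -> bool:
    """Success criterion from the example: yearly cost/effort below yearly savings."""
    return yearly_cost_usd < yearly_savings_usd


print(calls_avoided_per_month, monthly_savings_usd, yearly_savings_usd)  # 2000 20000 240000
```

Keeping the inputs as named constants means that when the team learns the real call cost or the real share of late payers, only one line changes and the ceiling for the solution’s cost updates with it.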

Is it technically viable?

Once we clearly know what we need to validate regarding the problem, its solution, and the ROI, we can use the same 1-2-all process to discuss the technical criteria for considering the solution successful.

Example:

Is it technically viable?

For it to be technically viable, we need to build an automated message-sending process. For the first slice, we can hire an SMS provider to test the hypotheses. To incorporate this into a company platform, however, we’ll consider it technically viable if the monthly cost after it’s built remains below $1k and we can keep automated test coverage at 80%, so that code maintenance costs stay to a minimum. Furthermore, the solution must be sized for 100k clients per month, with 20% growth per year.
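Three criteria have to hold at the same time here, and the growth target compounds. The minimal Python sketch below encodes them; the planning horizon (`years_ahead`) is an assumption, since the example doesn’t say how many years of growth the platform must absorb, and all names are hypothetical.

```python
BASE_CLIENTS_PER_MONTH = 100_000
YEARLY_GROWTH_PCT = 20
MAX_MONTHLY_COST_USD = 1_000
MIN_TEST_COVERAGE_PCT = 80


def required_capacity(years_ahead: int) -> int:
    """Clients per month after `years_ahead` years of 20% compound growth,
    rounded up. Integer math avoids floating-point error."""
    numerator = BASE_CLIENTS_PER_MONTH * (100 + YEARLY_GROWTH_PCT) ** years_ahead
    denominator = 100 ** years_ahead
    return -(-numerator // denominator)  # ceiling division


def technically_viable(monthly_cost_usd: int, test_coverage_pct: int,
                       capacity_per_month: int, years_ahead: int) -> bool:
    """All three criteria from the example must hold at once."""
    return (
        monthly_cost_usd < MAX_MONTHLY_COST_USD
        and test_coverage_pct >= MIN_TEST_COVERAGE_PCT
        and capacity_per_month >= required_capacity(years_ahead)
    )


for year in range(4):
    print(year, required_capacity(year))  # 100000, 120000, 144000, 172800
```

For instance, a design that handles 150k clients a month passes a two-year horizon (144k) but not a three-year one (172.8k), so the horizon is worth agreeing on explicitly.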

By the end of this exercise: Right off the bat, you’ll have mapped the 4 main risks related to the investment needed to solve the problem. There will be others, of course, but these are the most important for avoiding wasted effort and money on an inadequate solution. It’s absurdly common to focus only on execution risks, when in fact the greatest risks to a strategy are the hypotheses we don’t (in)validate. You can repeat this process for the other prioritized ideas.
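Teams that keep their matrices in a shared repository rather than on paper can track which quadrants are still open. This is a minimal Python sketch of one matrix per prioritized idea; the class names, the idea label, and the `validated=True` outcome in the usage lines are hypothetical, for illustration only.

```python
from dataclasses import dataclass, field
from typing import Optional

# The four quadrants drawn on the sheet, in the order the team fills them in.
QUADRANTS = (
    "Does the problem exist?",
    "Does the solution solve the problem?",
    "Is it economically viable?",
    "Is it technically viable?",
)


@dataclass
class Quadrant:
    hypothesis: str
    success_criterion: str            # the agreed metric and threshold
    validated: Optional[bool] = None  # None = not tested yet, False = invalidated


@dataclass
class HypothesesMatrix:
    idea: str                         # one prioritized idea from the Tank
    quadrants: dict[str, Quadrant] = field(default_factory=dict)

    def open_risks(self) -> list[str]:
        """Quadrants that are still empty, untested, or invalidated."""
        return [
            name for name in QUADRANTS
            if name not in self.quadrants or self.quadrants[name].validated is not True
        ]


matrix = HypothesesMatrix(idea="Payment reminders")  # hypothetical idea name
matrix.quadrants["Does the problem exist?"] = Quadrant(
    hypothesis="Bills paid in cash aren't practical and are easy to forget",
    success_criterion=">= 25% of a 200-client sample cite the payment process",
    validated=True,  # hypothetical outcome
)
print(matrix.open_risks())  # the three remaining quadrants
```

An invalidated quadrant stays on the open list on purpose: it is a risk that still needs a decision, not a box that has been ticked.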

3 – Dependencies Map

And lastly, we have to map one of the biggest reasons roadmaps stall: dependencies! We usually strive to eliminate dependencies, but that’s not always viable. Besides, whether we want to eliminate them or just make sure they won’t become a problem, we have to make them visible to the organization. That is the goal of this exercise.

To run the Dependencies Map you’ll need:

a. 1 whiteboard

b. post-its

c. sharpies or similar

d. your team + stakeholders

e. a completed Decantation Tank and Hypotheses Matrix

f. optional: colored tape (thin and wide)

Before we begin: This map is not a list of complaints. If your team lists dependencies for everything, either we have some serious knowledge-sharing work ahead of us, or the team is reinforcing fiefdoms of expertise and acting less like a multidisciplinary team that must learn new things to solve complex problems. Aligning on items with other areas is not a dependency; it’s a normal part of the job. A dependency means you need a joint delivery with someone else for what you’re doing to work.

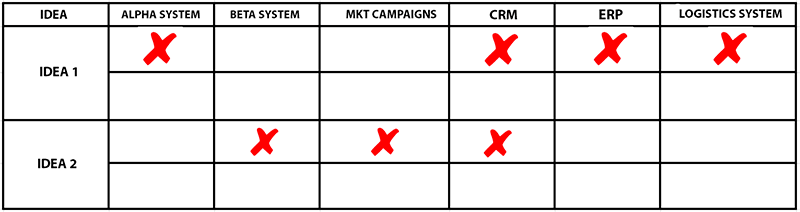

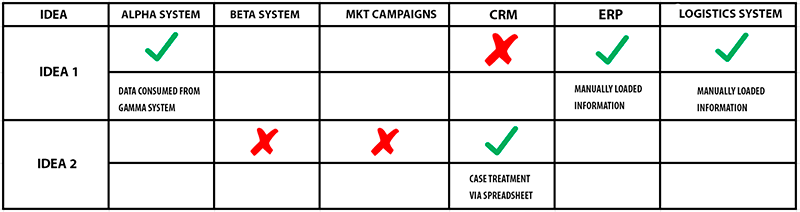

Ask your team to write an idea prioritized in the Decantation Tank on a post-it.

Then have the team list, on post-its, all the systems, areas, and other teams whose deliveries they depend on to make that idea work.

On the whiteboard, draw a table using this template:

The value delivered to business (Results metrics from the Tank, success metrics from hypotheses)

The most urgent risks (the riskiest hypotheses for the business)

The necessary unblockings to begin delivering value (it makes no sense to deliver something ahead of time if it depends on another team’s part and that team hasn’t prioritized it)
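If the board also lives in a tool or a script, each row of the table can be kept as data so blocked ideas stand out. This minimal Python sketch mirrors the three columns of the template; the class names and the provider names in the usage lines (“SMS provider”, “Billing platform team”) are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Dependency:
    provider: str      # system, area, or team whose delivery we need
    prioritized: bool  # has the provider prioritized its part yet?


@dataclass
class MapRow:
    idea: str                  # prioritized idea from the Decantation Tank
    value_delivered: str       # result metric (Tank) or success metric (matrix)
    urgent_risks: list[str]    # riskiest hypotheses for the business
    dependencies: list[Dependency] = field(default_factory=list)

    def unblockings_needed(self) -> list[str]:
        """Providers that must prioritize their part before we start delivering."""
        return [d.provider for d in self.dependencies if not d.prioritized]

    def can_start_delivering(self) -> bool:
        return not self.unblockings_needed()


row = MapRow(
    idea="Payment reminders",  # hypothetical
    value_delivered="Defaulting drops by at least 10% among selected clients",
    urgent_risks=["Clients may lack internet access for a bar code link"],
    dependencies=[
        Dependency("SMS provider", prioritized=True),            # hypothetical
        Dependency("Billing platform team", prioritized=False),  # hypothetical
    ],
)
print(row.unblockings_needed())    # ['Billing platform team']
print(row.can_start_delivering())  # False
```

This follows the rule in the template: an idea whose counterpart hasn’t been prioritized by the other team isn’t ready to start, however far along our own side is.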

ATTENTION: All the tools presented here must be continuously updated. Using them once and rushing into execution, without coming back to discuss what was learned during execution and during user tests of the hypotheses, is extremely harmful. When properly updated, these tools will help your team improve its strategy and results.