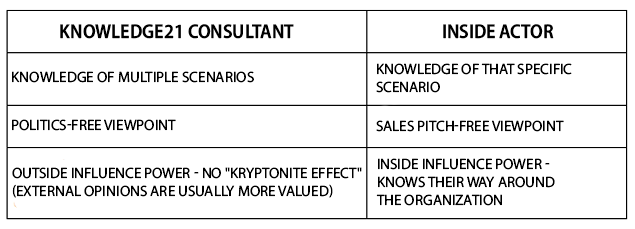

Unlike traditional consulting companies, Knowledge21 doesn’t employ standard recipes in Agile Transformation or Expansion. We understand that each scenario is unique, so we work side by side with a client in a combined effort to tailor our services to their needs. And of course, working side by side also leads to automatic alignment all throughout the process, minimizing risks for both sides.

Due to this strong client-Knowledge21 synergy, we’re constantly adapting to the client’s reality to deliver results with a positive impact. We’re always in “continuous improvement mode”, which leads us to create new team exercises and new tools for our day-to-day work within organizations whenever new circumstances arise.

One practice Luiz Rodrigues and I enjoy a lot within a process of individual and group development is provocation through reflection. You know, that moment when your eyes widen, something clicks, you realize something you’ve learned actually makes sense, and your head goes “HOLY SHIT!” How about leveraging these unique moments to create a tangible action plan with theoretical and practical support to achieve the desired state?

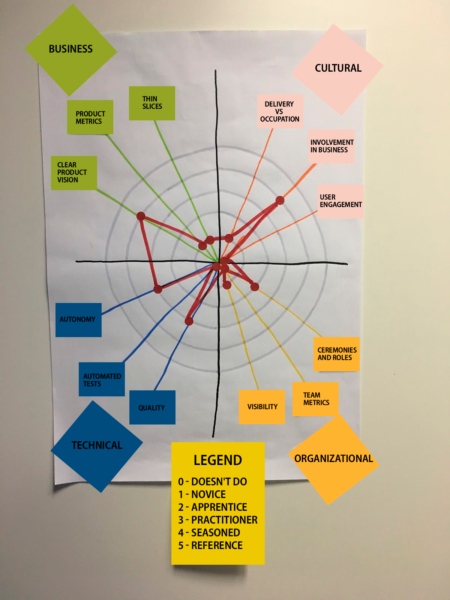

Team Coaching Assessment

Team Coaching Assessment is a reflective and provocative tool facilitated by a knowledgeable coach with the goal of:

- Enabling the team’s self-assessment, so that gaps between their reality and the ideal scenario can be made visible to all members.

- Generating a prioritized Training/Learning Backlog based on gaps identified by the team.

- Conducting Agile Coaching sessions, combining theory and practice, about each prioritized item.

- Promoting daily sharing of learning, results, and next steps between all team members.

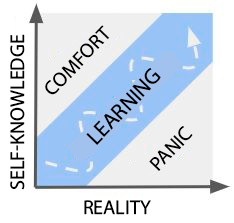

This way we find it possible to quickly create an environment conducive to experimentation, driving the team out of their comfort zone without causing stress through a healthy balance between challenge and safety.

Before presenting our assessment, however, it’s important that we align our expectations about this tool. It must never be used to compare teams or members, or to determine who’s doing best. We use assessment as a support tool to identify points to improve and achieve excellence. We reinforce practices and behaviors that positively impact people daily while creating an action plan to address issues that hinder the work to be done. The only comparisons we seek are between the team and itself: when reassessing after a certain period, the team reflects on its evolution throughout the last cycle and on how to improve in the next.

The information gathered can be used directly in team development, but also in broader organizational development if analyzed and employed at a systemic level. This way we may foster real knowledge exchange between teams, where practices in which each group is already a reference can be shared within the organization. We can incentivize teams and the whole organization to learn to learn.

Understanding the Scale

As soon as we came up with this self-assessment, we decided to establish an easy scale on which people could readily situate themselves, showing where they are at that moment in relation to each topic.

To that end, we decided to create levels that captured the perceived importance of a certain practice in the context of that team, its use in day-to-day work, and the team’s level of mastery to share what they’ve learned from experience.

So, the level characterizations we chose were:

- Doesn’t do: We don’t know how to put this to use in our practical context. It’s something new for our team or we don’t consider this to be relevant in our context.

- Novice: We’ve found this is relevant to our team. We’re on our first steps.

- Apprentice: This is definitely something we should take into account. We’ve decided to dive deeper into it. We’re doing our research and empirically finding out how to apply this.

- Practitioner: This is part of our everyday work. We do encounter a couple of challenges, but this is pretty normal stuff for us.

- Seasoned: We’ve been doing this long enough to say we know this well. We’re constantly discussing this at a certain level of maturity with other teams and other people. This is something our team will hardly stop doing; we believe this to be the best practice.

- Reference: We do this so well that if anybody wants to see how it’s done, they can just come here. We’re comfortable enough to give talks, workshops, etc., about this.
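As a rough illustration only, the scale can be sketched as an ordered type from which a first draft of the Training/Learning Backlog is derived. The names `Level` and `learning_backlog`, the sample topics, and the idea of sorting by level are all assumptions made for this sketch; in practice the team itself decides what to prioritize.

```python
from enum import IntEnum


class Level(IntEnum):
    """The six levels of the scale, ordered from least to most mastery."""
    DOESNT_DO = 0
    NOVICE = 1
    APPRENTICE = 2
    PRACTITIONER = 3
    SEASONED = 4
    REFERENCE = 5


def learning_backlog(assessment: dict[str, Level]) -> list[str]:
    """Return topics ordered from lowest to highest self-assessed level.

    Assumption: this ordering is only a conversation starter;
    the team still decides the real priorities.
    """
    # Ties on level are broken alphabetically so the result is stable.
    return sorted(assessment, key=lambda topic: (assessment[topic], topic))


# Hypothetical self-assessment of a single team (never used to compare teams).
assessment = {
    "Thin slices": Level.NOVICE,
    "Product metrics": Level.DOESNT_DO,
    "Visibility": Level.PRACTITIONER,
    "Automation": Level.APPRENTICE,
}

backlog = learning_backlog(assessment)
print(backlog)
```

Keeping the levels ordered also makes it easy for a team to compare its own results between one reassessment and the next.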

Understanding the Business domain

This is the domain of efficacy, of subjects related to a company’s products and objectives, like return on investment (ROI) calculations for the product, its next features, prioritization, planning future releases, and eventually contracts in an Agile context.

This is where Product Managers or Product Owners go full SOB, but not the way you’re thinking. They slice, organize, and prioritize, offering direction to the work done in the organization.

Among the main points of reflection in this domain, we usually highlight:

- A clear vision of the product: Does everybody know exactly how the product should generate value? Can every team member come up with an elevator pitch for the product they’re working on? Is the relationship between each line of code and its impact on the company’s strategy clear for every team member? Can we clearly see the path we’re taking the product down and the reasons for it?

- Thin slices: Are the business items worked on small enough not to be considered complex, but simple? Does starting and finishing a business item in a single day seem impossible, or is it normal? Do single work items generate business value or are they just technical pieces we’ll have to put together to generate that value later? How much is thin-slicing an everyday practice? Is the team always focused on the 20% of work that’ll bring 80% of the value or do they usually try for 100%?

- Product metrics: Does the team have the numbers that tell them if they’re hitting their target with each delivery? Is work guided by data on our users and their use of our products, or is it based on guesswork? Are conversion funnel and pirate metrics terms the whole team understands, or are these new to them? Is the team guided by numbers or just by what those higher up in the hierarchy consider to be better? Does the whole team keep track of these numbers or just the P.O. / Product Manager?

Understanding the Cultural domain

This domain has human relations as its main focus, and the empowerment level of people to make decisions about their work, promoting individual self-organization and motivation.

This domain also comprises two extremely important points of Agility: paradigm shift and continuous improvement. When addressing the Cultural domain, we usually talk about:

- Involvement in the business: How close is the team to the business? Is business language spoken fluently, or is the native Techish the only game in town? Is the P.O. the only access to the business, or does everybody on the team feel comfortable seeking business information outside the team? Does the team see itself as just a development team, or as a business team that just so happens to develop software?

- User involvement: Does the team know their users? Do they have a profile of those who use or will use their product? Do they have real feedback from those users? Are they in direct contact with users to seek feedback? Is feedback consistently accessible to the P.O., or to the whole team?

- Delivery vs Occupation: It makes no sense for knowledge workers to be evaluated by the number of hours they put in, nor by the number of lines of code written, for example. Is the team valued for hours worked or for value delivered and its impact on business? Is being busy still important to those who evaluate us?

Understanding the Organizational domain

This domain relates to how the company is structured and how teams work. Which methods does the team use? What do the development cycle, the iteration process, and the distribution of people look like? This is the domain of efficiency, but always in line with the efficacy from the Business domain.

We like to emphasize this relationship between efficiency and efficacy because, thanks to the strong influence of more traditional work mindsets, we’re often pressured to work faster without knowing why or even what impact we’re trying to have with our efforts.

From the main topics in this domain, we like to talk about:

- Team metrics: What parameters show whether the team is improving over time? Does the team have numbers, charts, and indicators to track their evolution? Are these numbers watched closely? Is it all for the manager’s eyes only, or is the whole team responsible for it? Is it all tallied and analyzed by the manager, or by the whole team? Does your team have a Lead Time history? And a Cumulative Flow Diagram?

- Roles and ceremonies: Does the team perform the ceremonies from the methods or frameworks it uses? Is it done deliberately, understanding the purpose of each ceremony, or is it still done mechanically, to try to understand how it works? Are the roles adopted clear to all team members? Are such roles useful? Is it explicitly clear what’s expected from each role?

- Visibility: If somebody shows up at the team’s workplace, can they understand what’s happening? If somebody on the team takes a vacation, will they need to sit down and be updated by someone else on what’s going on or will they be able to, just by visibility, know exactly where each project is? Is visual information useful, or is it more like visual pollution? Is there too much old useless stuff on the walls?
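For teams that don’t yet keep a Lead Time history, the sketch below shows one simple way to start one. It assumes lead time means the calendar days between starting and finishing a work item (teams define this differently), and the function name `lead_time_days` and the sample dates are hypothetical.

```python
from datetime import date
from statistics import mean


def lead_time_days(started: date, finished: date) -> int:
    """Lead time of one work item in calendar days (assumed definition)."""
    return (finished - started).days


# Hypothetical work items: (start date, finish date).
items = [
    (date(2024, 3, 1), date(2024, 3, 4)),
    (date(2024, 3, 2), date(2024, 3, 3)),
    (date(2024, 3, 5), date(2024, 3, 10)),
]

# One entry per finished item; appending to this list over time builds the history.
history = [lead_time_days(started, finished) for started, finished in items]
average = mean(history)

print(history)  # [3, 1, 5]
print(average)
```

Tracked by the whole team over several cycles, a history like this lets the team compare itself only with its own past.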

Understanding the Technical domain

This domain aims to understand the team’s quest for technical excellence by focusing on how the work is done. Automation is crucial in this domain since it reflects on other technical subjects like quality, standards, tools, etc. In IT, good practices in software engineering brought on by XP are in the Technical domain.

- Quality: Is the team interested in caring for product health? Do they collect metrics to support this movement? Is code with bad smells commonly refactored? Do they regularly review code and work in pairs? Is the code clean or is supplementary documentation necessary to understand it?

- Technical autonomy: In technical aspects, does the team do what other teams, departments or people say or does it do what it sees as ideal? Does the team have technical autonomy to choose the best technical way of implementing systems? If they need to change code in other systems, do they have that autonomy? Is everybody on the team able to work on any code by the team without the need to ask for permission? Can the team choose which technologies to use?

- Automation: Does the team implement automated tests? Is thinking about automated tests a part of its culture? Do they test what they do at the most appropriate level for each context (unit, integration, service, interface, etc.), or is it manual for the most part? Do they have all the necessary knowledge to automate their tests? Do they also focus on automating repetitive work to be able to spend more time on creative work?

Conclusion

Agility is often misunderstood, leading organizations and teams to focus solely on speed. That’s why the 4 domains of Agility established by Knowledge21 help to understand the importance of balance between Efficacy (Business), Empowerment (Culture), Efficiency (Organizational), and Excellence (Technical). This creates high-performance teams that learn constantly by continuously delivering value to their users.

The use of Assessment in the 4 domains of Agility is an excellent opportunity for a moment of collective reflection in which team members self-assess their own performance. Points of excellence and improvement thus emerge naturally from the team’s dynamics, allowing beginner teams, and even those that already have an Agility mindset, to become aware of their present moment and understand how to better develop themselves.

A good Facilitator with Agility experience can strengthen this meeting even more by fully exploring the four domains.